Updated on February 1, 2024 to show a demo video of how the feature works on the Google Pixel 8.

Google has launched its new Circle to Search feature, which was announced as part of a host of AI features for the new Samsung Galaxy S24 Series phones. But it will be available on other premium Android devices, too.

Google is testing various ways to enhance search through its powerful AI tools. You can already search by voice and with the camera using Lens. The company has also been testing how generative AI can be used to understand natural language. The two latest features in this space are Circle to Search and an AI-powered multi-search experience.

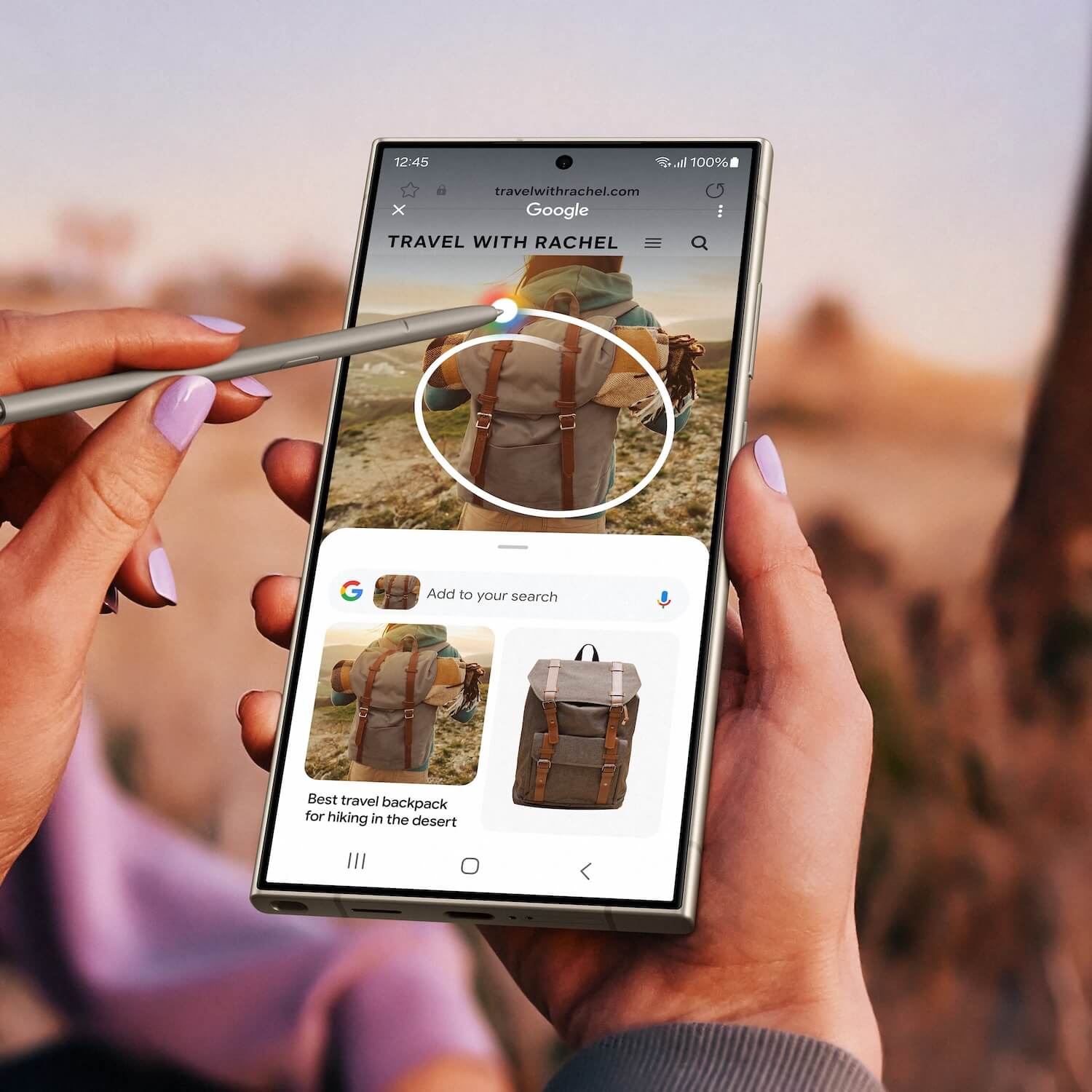

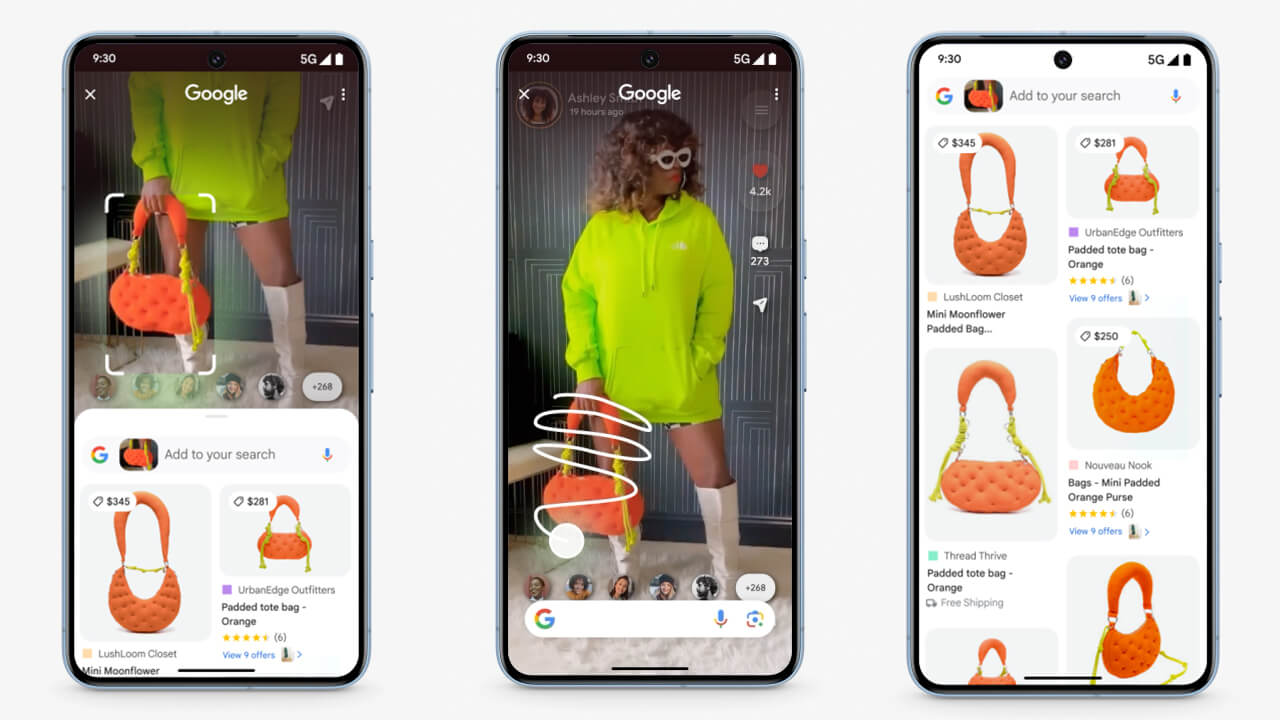

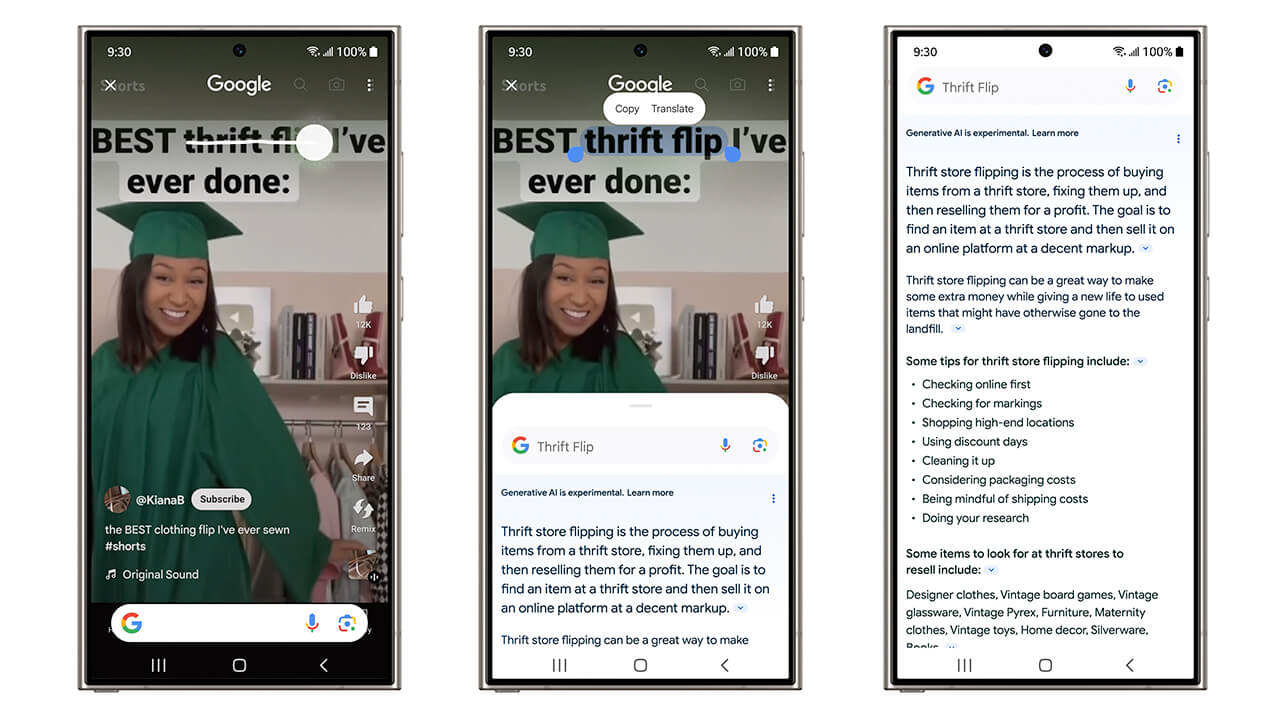

Circle to Search doesn’t just involve circling: you can also highlight or even scribble on an image, or part of an image, on your phone’s screen to call up a search window without leaving the app. To activate it, long-press the home button or navigation bar on your Android phone. From there, select any image, text, or video you want to know more about with whichever gesture feels most natural: circle, highlight, scribble, or tap. It might be a purse someone is holding in a photo, a delicious dish that appears on a website, or a specific item in your social feed.

The feature launches on January 31, 2024, not just on the Galaxy S24 Series phones but also on the Pixel 8 and Pixel 8 Pro. It will be available globally on “select premium Android phones” shortly after. Search and Shopping ads will continue to appear in dedicated ad slots throughout the results page.

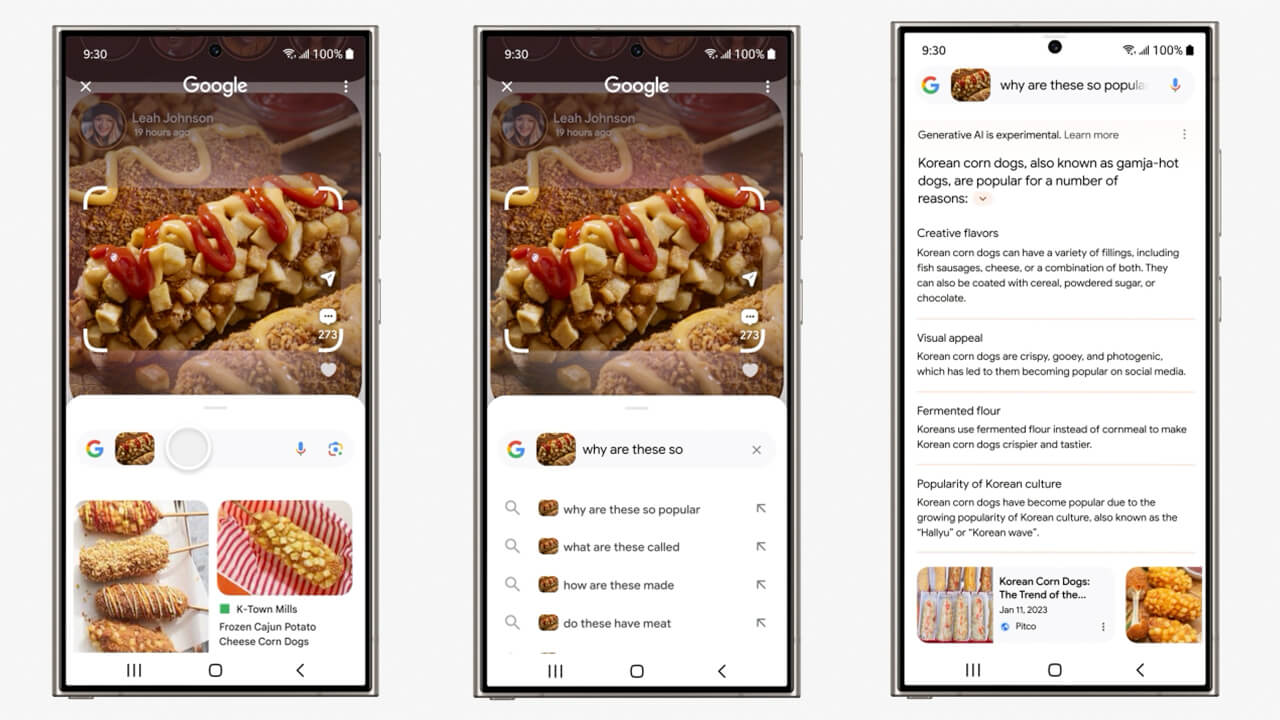

Taking this a step further is multi-search in Lens, which now goes beyond helping you search with a photo and add a word, like a specific colour, to refine the results. Now, thanks to advances in generative AI, you can point your camera at something, or upload a photo or screenshot, and ask a question using the Google app. Instead of returning only the usual visual matches, it can provide more context for complex or nuanced questions.

An example might be pointing your camera at a board game at a yard sale to ask more about it and how it’s played. Or you could select an image of a trending food item to learn more about it. This can even work in videos, like YouTube Shorts.

AI-powered overviews on multi-search results are launching this week in English in the U.S. for everyone, without the need to enroll in Search Labs. To get started, look for the Lens camera icon in the Google app for Android or iOS. If you’re outside the U.S. and have opted into SGE, you can preview this new experience in the Google app. You’ll also be able to access AI-powered overviews on multi-search results within Circle to Search.

These advances are all part of Google’s testing over the last year through its Search Generative Experience (SGE) in Search Labs.